Robots.txt is one of the simplest ways to control how search engines crawl your website.

It is a plain text file that tells search engine robots which pages or files on your site they should not crawl. Without proper management of the Robots.txt file, you risk accidentally blocking important pages from search engines, which can lead to decreased organic traffic.

However, editing robots.txt can seem like a daunting task for those unfamiliar with coding. Luckily, if you have a WordPress site, there are easy and efficient ways to edit your robots.txt file, with or without using a plugin.

In today’s article, we’ll show how to edit robots.txt in WordPress both with and without a plugin. We’ll also cover how to allow or disallow specific bots from crawling your WordPress website.

What is Robots.txt?

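Robots.txt is a simple text file that guides search engine bots on how to crawl pages on your website. It acts as a gatekeeper for well-behaved crawlers, letting you decide which parts of your site they may visit. Keep in mind that it is a set of instructions, not access control: the file itself is public, and it does not make any page private.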

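Robots.txt follows the Robots Exclusion Protocol, a standard set of directives that tell search engine robots which pages or sections of your site they should not crawl. Note that blocking a page from crawling does not guarantee it stays out of search results; if other sites link to it, search engines may still index the URL. To keep sensitive content out of search results, use a noindex tag or password protection instead.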

Creating or editing the Robots.txt file requires precise syntax to avoid unintentionally blocking important pages. It uses “Disallow:” and “Allow:” directives to specify which URL paths crawlers should skip and which they may crawl.

To view your site’s current Robots.txt file, simply append ‘/robots.txt’ to the end of your domain name, like so: `yourdomain.com/robots.txt`. You can also check it in the robots.txt report in Google Search Console.
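Because crawlers only look for robots.txt at the root of a host, you can derive its address from any page URL. The minimal Python sketch below (standard library only) shows one way to do that and then load the live file with `urllib.robotparser`; `example.com` is a placeholder, and the helper names `robots_url` and `load_live_robots` are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the host serving page_url.

    Crawlers only request robots.txt from the root of a host, so the
    path, query string, and fragment of the page URL are dropped.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def load_live_robots(page_url: str) -> RobotFileParser:
    """Download and parse the live robots.txt file (needs network access)."""
    parser = RobotFileParser(robots_url(page_url))
    parser.read()
    return parser


if __name__ == "__main__":
    print(robots_url("https://example.com/blog/hello-world/?ref=home"))
    # prints https://example.com/robots.txt
```

Calling `can_fetch("Googlebot", url)` on the parser returned by `load_live_robots` asks the downloaded file whether that crawler may visit `url`.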

Understanding and properly configuring your Robots.txt file is crucial for SEO. It helps keep search engines from wasting crawl time on duplicate or low-value pages that might dilute your site’s relevance and authority.

Why Is Robots.txt Important for Your Website?

Robots.txt plays a central role in how search engines crawl your website. Compliant search engine bots read it before crawling your pages, which makes it the first place to control which parts of your site they visit. This control helps ensure that crawlers focus on the content you want to appear in search results, supporting your site’s SEO.

Furthermore, an effectively configured Robots.txt file keeps crawlers away from duplicate or low-value URLs, such as internal search results or admin pages. This keeps search engines focused on the pages that matter for your site’s visibility and user traffic.

Robots.txt also allows for the efficient management of crawler resources. By restricting the areas bots can crawl, you reduce unnecessary load on your server and keep more bandwidth available for real users.

Lastly, by using Robots.txt to guide search engine bots, website administrators gain more control over which parts of their site search engines spend their time crawling. This control is essential for maintaining the integrity and relevance of your online presence.

Robots.txt Tutorial

Basic Fundamentals of Robots.txt

```text
User-agent: [user-agent name]
Disallow: [URL string not to be crawled]

User-agent: [user-agent name]
Allow: [URL string to be crawled]

Sitemap: [URL of your XML Sitemap]
```

Let’s talk about the fundamentals of the Robots.txt file:

- User-agent [user-agent name]: The search engine robot or crawler you want to give instructions to, such as Googlebot, Bingbot, or GPTBot.

- Disallow [URL string not to be crawled]: Specifies the pages or directories you want to exclude from crawling.

- Allow [URL string to be crawled]: Permits crawling of specific URLs inside a directory that a Disallow rule blocks.

- Sitemap [URL of your XML Sitemap]: Points to the location of your XML sitemap, helping search engine bots discover new or updated content.
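To make the structure above concrete, here is a simplified Python sketch that splits a robots.txt file into groups. It assumes the common reading of the format: consecutive User-agent lines start a group, the Allow and Disallow lines beneath them belong to that group, and Sitemap lines stand on their own. The `Group` class and `parse_robots` function are hypothetical names for illustration, and other directives such as Crawl-delay are ignored.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Group:
    """One group: the user agents it targets and its (directive, path) rules."""
    user_agents: list[str] = field(default_factory=list)
    rules: list[tuple[str, str]] = field(default_factory=list)


def parse_robots(text: str) -> tuple[list[Group], list[str]]:
    """Split robots.txt text into groups and a list of sitemap URLs."""
    groups: list[Group] = []
    sitemaps: list[str] = []
    current: Group | None = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # skip blank or malformed lines
        key, value = line.split(":", 1)
        key, value = key.strip().lower(), value.strip()
        if key == "user-agent":
            # A User-agent line after rules starts a new group;
            # consecutive User-agent lines share one group.
            if current is None or current.rules:
                current = Group()
                groups.append(current)
            current.user_agents.append(value)
        elif key in ("allow", "disallow") and current is not None:
            current.rules.append((key, value))
        elif key == "sitemap":
            # Sitemap lines do not belong to any group.
            sitemaps.append(value)
    return groups, sitemaps
```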

Default Robots.txt File in WordPress

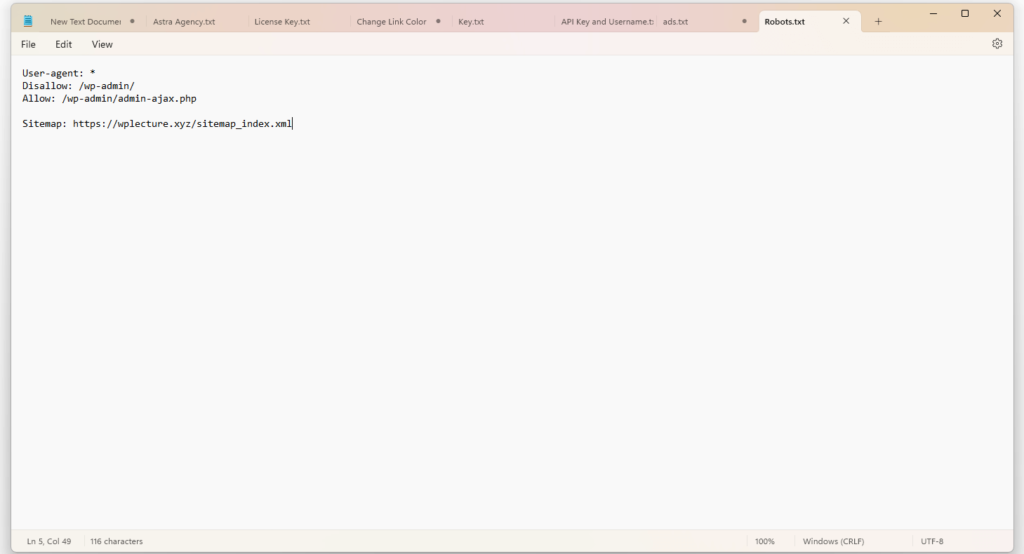

```text
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://wplecture.xyz/sitemap_index.xml
```

This is the default Robots.txt file for a WordPress website. “User-agent: *” means any search engine crawler is affected by the rules that follow.

“Disallow: /wp-admin/” instructs all search engines not to crawl files and folders within your WordPress admin directory.

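The “Allow: /wp-admin/admin-ajax.php” directive creates an exception to the previous rule, allowing bots to access this one file within the wp-admin folder. Google resolves such conflicts by following the most specific rule, meaning the one with the longest matching path, so the Allow rule wins for this URL.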

Lastly, “Sitemap: https://wplecture.xyz/sitemap_index.xml” informs search engine bots where to find your sitemap file, making it easier for them to discover and index your site’s content.
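The Allow-over-Disallow behavior can be illustrated with a short Python sketch of longest-match precedence, the approach Google documents for conflicting rules (on a tie, the less restrictive Allow rule wins). It is a simplification: it handles plain path prefixes only, not the `*` and `$` wildcards, and `is_allowed` is a hypothetical helper. Python’s built-in `urllib.robotparser` applies rules in file order instead (first match wins), so it would report `admin-ajax.php` as blocked here, which is why this check is written by hand.

```python
def is_allowed(rules: list, path: str) -> bool:
    """Decide whether path may be crawled using longest-match precedence.

    rules holds (directive, path_prefix) pairs from a single group, e.g.
    ("disallow", "/wp-admin/"). Wildcards (* and $) are not handled.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty rule value matches nothing
        if len(prefix) > best_len or (len(prefix) == best_len and directive == "allow"):
            # A longer (more specific) match wins; on a tie, Allow wins.
            best_len = len(prefix)
            allowed = directive == "allow"
    return allowed


# Rules from the default WordPress group above.
WORDPRESS_DEFAULT = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]

for p in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/blog/"):
    print(p, is_allowed(WORDPRESS_DEFAULT, p))
```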

How to Disallow All Bots from Crawling Your Site

```text
User-agent: *
Disallow: /
```

If you wish to block all search engine crawlers from accessing your site, simply use the above code in your Robots.txt file. This tells compliant bots not to crawl any page or file on your website.

Note: Disallowing bots from accessing your entire site is not recommended unless necessary, as it can negatively impact your site’s SEO and result in a decrease in organic traffic. It is important to carefully consider the potential consequences before implementing this directive.
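Before publishing a block-all rule, you can confirm what it does with Python’s standard `urllib.robotparser` module. This minimal sketch parses the two lines above from a string rather than fetching a live file; `example.com` and the sample paths are placeholders.

```python
from urllib.robotparser import RobotFileParser

BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

block_all = RobotFileParser()
block_all.parse(BLOCK_ALL.splitlines())  # parse from text instead of downloading

for url in ("https://example.com/", "https://example.com/blog/hello-world/"):
    # Every path starts with "/", so every URL is disallowed for every bot.
    print(url, block_all.can_fetch("Googlebot", url))
```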

How to Disallow a Specific Bot from Crawling Your Site

```text
User-agent: AdsBot-Google
Disallow: /

User-agent: *
Allow: /
```

To disallow a specific bot from crawling your site, you first need to identify the user-agent name for that bot. You can usually find it in the crawler operator’s documentation (for example, Google publishes a list of its crawlers) or in your server’s access logs.

In this example, we are blocking “AdsBot-Google” from crawling our site by using “Disallow: /”, which applies to all URLs and pages on our website. Then, we use “User-agent: *” followed by “Allow: /” to allow all other bots to crawl our site.
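You can check that the rules above block only the intended crawler with the same `urllib.robotparser` module. The sketch assumes you pass the crawler’s short product token (such as `AdsBot-Google`) rather than a full browser-style user-agent string, since the parser matches that token against the User-agent lines; `example.com` is a placeholder.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: AdsBot-Google
Disallow: /

User-agent: *
Allow: /
"""

specific_bot = RobotFileParser()
specific_bot.parse(RULES.splitlines())

for agent in ("AdsBot-Google", "Googlebot", "Bingbot"):
    # AdsBot-Google matches its own group; the others fall back to "*".
    print(agent, specific_bot.can_fetch(agent, "https://example.com/shop/"))
```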

How to Disallow Specific Files and URLs

```text
User-agent: *
Disallow: /what-is-wordpress/
Disallow: /how-create-wordpress-site/
Disallow: /hidden/file.html
```

If you want to block specific files or URLs from search engine crawlers, simply add them to the list of disallowed items in your Robots.txt file. This will prevent bots from crawling those specific pages or files.

Note: Be sure to use precise syntax when adding specific URLs or files, as even a minor mistake can result in the unintended blocking of important content. After publishing your changes, it’s a good idea to check the file in the robots.txt report in Google Search Console.
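Robots.txt rules match by URL prefix, which is where many “minor mistakes” come from. The Python sketch below parses the example rules with `urllib.robotparser` and checks a few hypothetical paths on a placeholder domain, showing that a rule with a trailing slash does not cover the same path without one, while a file rule still matches when a query string is added.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /what-is-wordpress/
Disallow: /how-create-wordpress-site/
Disallow: /hidden/file.html
"""

file_rules = RobotFileParser()
file_rules.parse(RULES.splitlines())

checks = [
    "/what-is-wordpress/",           # blocked: exact match
    "/what-is-wordpress/comments/",  # blocked: starts with the rule
    "/what-is-wordpress",            # allowed: no trailing slash, so no match
    "/hidden/file.html?page=2",      # blocked: a query string still matches
    "/hidden/other.html",            # allowed: a different file
]
for path in checks:
    print(path, file_rules.can_fetch("Googlebot", "https://example.com" + path))
```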

How to Disallow AI Models & Chatbots from Crawling Your Site

```text
User-agent: Google-Extended
Disallow: /

User-agent: GPTBot
Disallow: /
```

As more AI companies crawl the web to gather content for their models and chatbots, you may want to restrict access to your site’s content. To do so, include the above code in your Robots.txt file.

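For GPTBot, OpenAI’s web crawler, we use “User-agent: GPTBot” followed by “Disallow: /” to block it from crawling our site. “Google-Extended” works differently: it is not a separate crawler but a product token that Google’s existing crawlers respect. Googlebot still crawls your pages for Google Search, but “Disallow: /” under “User-agent: Google-Extended” tells Google not to use your content for training its Gemini AI models.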
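A quick way to confirm that the AI-related groups apply only to the tokens you named is to parse them with `urllib.robotparser`. In this sketch, `example.com` is a placeholder; because the file has no `User-agent: *` group, any other crawler, such as Googlebot, is allowed by default. Keep in mind that this only checks how the rules are parsed; it does not show what each company does with them.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: Google-Extended
Disallow: /

User-agent: GPTBot
Disallow: /
"""

ai_rules = RobotFileParser()
ai_rules.parse(RULES.splitlines())

for agent in ("GPTBot", "Google-Extended", "Googlebot"):
    # Only the named tokens match a group; everything else is allowed.
    print(agent, ai_rules.can_fetch(agent, "https://example.com/blog/"))
```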

How To Edit Robots.txt in WordPress With a Plugin

There are several WordPress plugins available that help you to edit or create new robots.txt files. Some of them are:

- All-in-One SEO Pack

- Yoast SEO

- Rank Math

- WP Robots Txt

Using a plugin like this, you can easily edit or create your Robots.txt file without any technical knowledge.

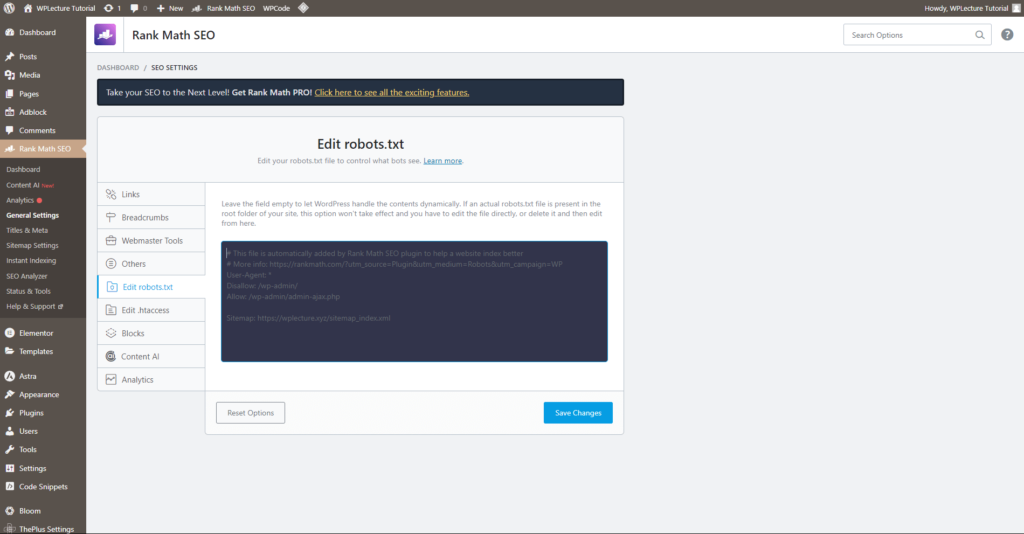

Let’s take a look at how you can edit your Robots.txt file with the help of the Rank Math SEO plugin. First, install and activate the Rank Math plugin on your WordPress website.

Then go to Rank Math SEO >> General Settings >> Edit robots.txt, where you will find an interface to create or edit your Robots.txt file.

How To Edit Robots.txt in WordPress Without a Plugin

Let’s take a look at how you can edit your Robots.txt file without using any plugin.

First, create a plain text file named “robots.txt” and open it with any text editor, such as Notepad or TextEdit. In TextEdit, choose Format > Make Plain Text so the file is not saved as rich text.

Next, you can add your desired directives following the [user-agent name] and [disallow/allow] format mentioned earlier in this article. Once you’re satisfied with your changes, save the file as “robots.txt” and upload it to your website’s root directory.
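If you prefer to generate the file rather than type it, a small Python script can assemble the directives in the expected layout: each User-agent line directly followed by its rules, groups separated by a single blank line, and Sitemap lines at the end. This is a minimal sketch; `build_robots_txt` and `save_robots_txt` are hypothetical helpers, and the sitemap URL is a placeholder.

```python
from __future__ import annotations

from pathlib import Path


def build_robots_txt(groups: dict[str, list[str]], sitemaps: list[str]) -> str:
    """Render robots.txt text from user-agent groups and sitemap URLs.

    groups maps a user-agent name to its rule lines, e.g. "Disallow: /wp-admin/".
    Rules sit directly under their User-agent line, and groups are
    separated by one blank line.
    """
    blocks = []
    for agent, rules in groups.items():
        blocks.append("\n".join([f"User-agent: {agent}", *rules]))
    if sitemaps:
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    return "\n\n".join(blocks) + "\n"


def save_robots_txt(content: str, path: str = "robots.txt") -> None:
    """Write the file as plain UTF-8 text, ready to upload to public_html."""
    Path(path).write_text(content, encoding="utf-8")


if __name__ == "__main__":
    content = build_robots_txt(
        {"*": ["Disallow: /wp-admin/", "Allow: /wp-admin/admin-ajax.php"]},
        ["https://example.com/sitemap_index.xml"],
    )
    print(content)
```

Calling `save_robots_txt(content)` writes the result to `robots.txt` in the current folder, which you can then upload as described below.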

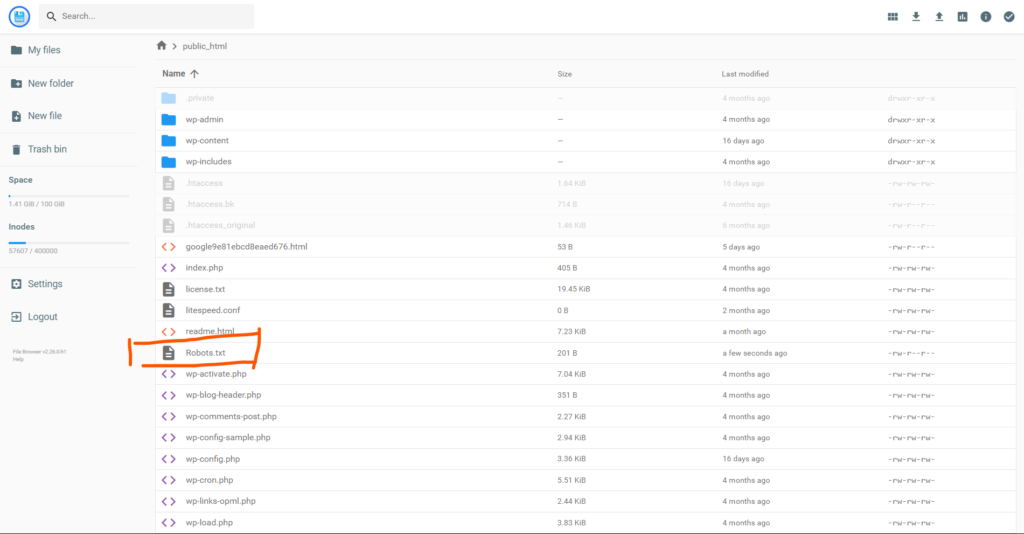

To upload the Robots.txt file to your website’s root directory, open cPanel File Manager, go to the public_html folder, and upload the “robots.txt” file.

If you already have a Robots.txt file in place, simply make sure to replace it with the updated version.

Note: Be cautious when editing your Robots.txt file manually, as one wrong move can prevent search engine bots from crawling and indexing your site’s content. It’s always recommended to use a plugin or consult an SEO expert for proper configuration.
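One way to reduce that risk is to check a draft against the URLs that must stay crawlable before you upload it. This Python sketch uses `urllib.robotparser`; the `find_blocked` helper, the draft rules, and the URL list are hypothetical examples. Here, the draft meant to hide only `/blog-drafts/` but used `Disallow: /blog`, which also blocks the whole blog because rules match by prefix.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

DRAFT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog   # meant to block only /blog-drafts/
"""

MUST_CRAWL = [
    "https://example.com/",
    "https://example.com/blog/",
    "https://example.com/blog/hello-world/",
    "https://example.com/contact/",
]


def find_blocked(robots_text: str, agent: str, urls: list[str]) -> list[str]:
    """Return the URLs in `urls` that robots_text would block for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    blocked = find_blocked(DRAFT, "Googlebot", MUST_CRAWL)
    if blocked:
        print("Do not upload yet; these URLs would be blocked:")
        for url in blocked:
            print(" ", url)
```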

FAQ

If pages you want in search results are being blocked by Robots.txt, check your Robots.txt file for the Disallow rule that matches those URLs, as well as for any errors or incorrect syntax, and remove or correct it. After that, you may need to wait a few days for search engine crawlers to recrawl and update their index.

You can edit your Robots.txt file in WordPress by using a plugin like All-in-One SEO Pack, Yoast SEO, Rank Math, or WP Robots Txt. Alternatively, you can also manually create and upload a text file named “robots.txt” to your website’s root directory using cPanel File Manager or an FTP client.

If you use Rank Math, you can access your Robots.txt file in WordPress by going to Rank Math SEO >> General Settings >> Edit robots.txt. If you’re not using a plugin, you can create and upload a “robots.txt” file to your website’s root directory using cPanel File Manager or an FTP client.

You can update your Robots.txt file by editing the existing file or creating a new one with any necessary changes, and then uploading it to your website’s root directory. You can also use a plugin like All-in-One SEO Pack, Yoast SEO, Rank Math, or WP Robots Txt to make updates directly in WordPress.

You can manually edit your WordPress site by accessing the files and code through an FTP client or using the file manager in your hosting cPanel. This gives you access to modify any aspect of your website, including the Robots.txt file.

However, it is recommended to use a plugin or consult an SEO expert for proper configuration, as making incorrect changes can negatively impact your site’s SEO and performance.

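If you can’t find a Robots.txt file on your server, it’s possible that it has not been created yet. When no physical file exists, WordPress generates a virtual robots.txt on the fly, which is why `yourdomain.com/robots.txt` may still load. You can create a new text file named “robots.txt” and upload it to your website’s root directory using cPanel File Manager or an FTP client; the physical file then takes the place of the virtual one.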

Alternatively, you can also use a plugin like All-in-One SEO Pack, Yoast SEO, Rank Math, or WP Robots Txt to create and edit your Robots.txt file directly in WordPress. Be sure to follow proper syntax and directives to avoid unintended blocking of important content.

Conclusion

I hope this tutorial gives you a clear understanding of Robots.txt and how to use it to control how search engines, AI models, and chatbots crawl your site.

Remember to carefully review any changes made to your Robots.txt file, as even minor mistakes can have significant consequences for your website’s visibility in search engine results.

It’s always best to use a plugin or consult an SEO expert for proper configuration to ensure the smooth functioning of your site. You can also use this default Robots.txt file and update it according to your site’s needs.

```text
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://wplecture.xyz/sitemap_index.xml
```

Keep in mind that blocking access to certain pages or content may harm your SEO efforts, so use this tool wisely to optimize your website’s performance.

And lastly, if you need any more assistance or have any questions, feel free to reach out to our support team for help.